Event-Driven Architectures: Anti-Patterns

Last time out I covered some battle-hardened patterns for designing and implementing serverless, event-driven applications. For context, at my current gig we run tens of serverless apps with millions of invocations across them every day, so it's fair to consider these patterns SaaS-proof.

For my next trick, I'll go over some common pitfalls in designing event-driven serverless applications. Treat this as general guidance for average use-cases rather than as prescriptive rules; these anti-patterns often produce designs that are technically functional but suboptimal from an architecture and/or cost point of view. It's constructive to know them and keep them in mind nonetheless.

The function monolith

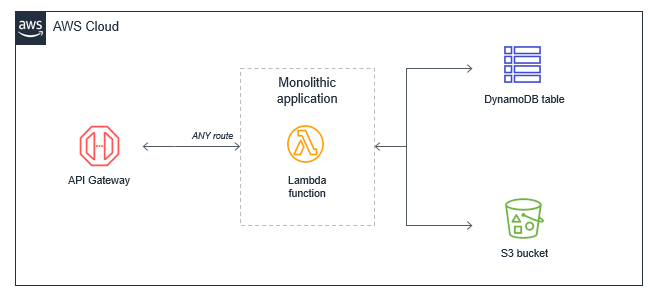

In many serverless applications migrated from traditional servers, EC2 instances or PaaS applications deployed in AWS Elastic Beanstalk or Azure App Service, developers “lift and shift” existing code. Frequently, this results in a single Lambda function that contains all of the application logic and is triggered for all events. For a basic web application, a monolithic Lambda function would handle all API Gateway routes and integrate with all necessary downstream resources.

This approach has several drawbacks:

- Package size: the Lambda function may be much larger because it contains all possible code for all paths, which makes it slower for the Lambda service to download and run, especially for "cold" invocations.

- Hard to enforce least privilege: the function’s IAM role must grant permissions to all resources needed for all paths, making the permissions very broad. Many paths in the function monolith do not need all the permissions that have been granted.

- Harder to upgrade: in a production system, any upgrades to the single function are more risky and could cause the entire application to stop working. Upgrading a single path in the Lambda function is an upgrade to the entire function.

- Harder to maintain: it’s more difficult to have multiple developers working on the service since it’s a monolithic code repository. It also increases the cognitive burden on developers and makes it harder to create appropriate test coverage for code.

- Harder to reuse code: typically, it can be harder to separate reusable libraries from monoliths, making code reuse more difficult. As you develop and support more projects, this can make it harder to support the code and scale your team’s velocity.

- Harder to test: as the lines of code increase, it becomes harder to unit test all the possible combinations of inputs and entry points in the code base. It’s generally easier to implement unit testing for smaller services with less code.

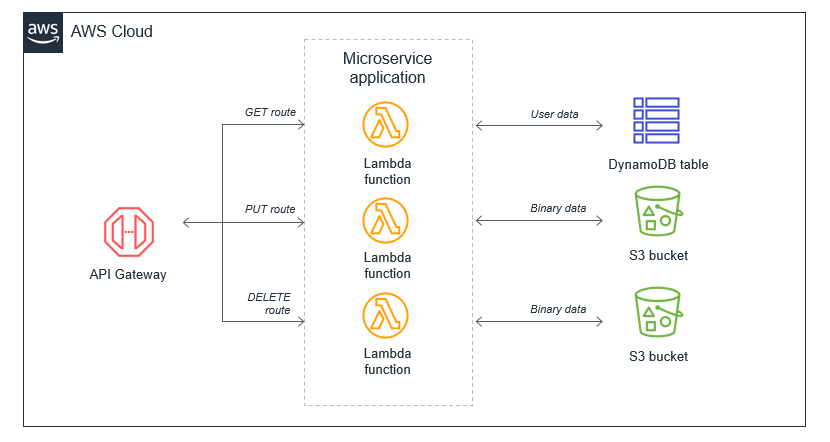

The preferred alternative is to decompose the monolithic Lambda function into individual microservices, mapping a single Lambda function to a single, well-defined task. In this simple web application with a few API endpoints, the resulting microservice-based architecture can be based upon the API Gateway routes.
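As a rough sketch of that mapping, the two handlers below each serve exactly one API Gateway route instead of sharing a router. The route names, the handler names and the in-memory `ORDERS` store are hypothetical stand-ins of mine; the event and response shapes assume the API Gateway Lambda proxy integration.

```python
import json

# Hypothetical in-memory store standing in for a real database.
ORDERS = {"42": {"id": "42", "status": "paid"}}


def get_order_handler(event, context):
    """Serves only GET /orders/{id} (hypothetical route)."""
    order_id = (event.get("pathParameters") or {}).get("id")
    order = ORDERS.get(order_id)
    if order is None:
        return {"statusCode": 404, "body": json.dumps({"error": "not found"})}
    return {"statusCode": 200, "body": json.dumps(order)}


def create_order_handler(event, context):
    """Serves only POST /orders (hypothetical route)."""
    payload = json.loads(event.get("body") or "{}")
    order_id = payload.get("id")
    if not order_id:
        return {"statusCode": 400, "body": json.dumps({"error": "id is required"})}
    ORDERS[order_id] = {"id": order_id, "status": "created"}
    return {"statusCode": 201, "body": json.dumps(ORDERS[order_id])}
```

Each handler would be deployed as its own function, so it can carry its own package, IAM role and release cadence.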

The process of decomposing a monolith depends upon the complexity of your workload. Using strategies like the strangler pattern, you can migrate code from larger code bases to microservices. There are many potential benefits to running a Lambda-based application this way:

- Package sizes can be optimized for only the code needed for a single task, which helps make the function more performant, and may reduce running cost. Package size is a determinant of cold start latency.

- IAM roles can be scoped to precisely the access needed by the microservice, making it easier to enforce the principles of least privilege. In controlling the blast radius, using IAM roles this way can give your application a stronger security posture.

- Easier to upgrade: you can apply upgrades at a microservice level without impacting the entire workload. Upgrades occur at the functional level, not at the application level, and you can implement canary releases to control the rollout.

- Easier to maintain: adding new features is usually easier when working with a single small service than with a monolith with significant coupling. Frequently, you implement features by adding new Lambda functions without modifying existing code.

- Easier to reuse code: when you have specialized functions that perform a single task, it’s often easier to copy these across multiple projects. Building a library of generic specialized functions can help accelerate development in future projects.

- Easier to test: unit testing is easier when there are few lines of code and the range of potential inputs for a function is smaller.

- Lower cognitive load for developers since each development team has a smaller surface area of the application to understand. This can help accelerate onboarding for new developers.
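To make the least-privilege benefit a bit more concrete, here is a minimal sketch of per-function IAM policy documents. The table ARN, account ID and the split into a "read" and a "write" function are assumptions for illustration only; the policy document layout (`Version`, `Statement`, `Effect`, `Action`, `Resource`) is the standard IAM JSON policy format.

```python
import json

# Hypothetical ARN; substitute your own region, account and table.
ORDERS_TABLE_ARN = "arn:aws:dynamodb:eu-west-1:123456789012:table/orders"


def table_policy(table_arn, actions):
    """Build an IAM policy document that allows only `actions` on one table."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": sorted(actions),
                "Resource": table_arn,
            }
        ],
    }


# The function behind the read route can only read ...
read_policy = table_policy(ORDERS_TABLE_ARN, ["dynamodb:GetItem"])
# ... and the function behind the write route can only write.
write_policy = table_policy(ORDERS_TABLE_ARN, ["dynamodb:PutItem"])

if __name__ == "__main__":
    print(json.dumps(read_policy, indent=2))
```

A function monolith would need the union of both statements (and every other path's permissions) on a single role.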

The function orchestrator

Many business workflows result in complex workflow logic, where the flow of operations depends on multiple factors. In an e-commerce example, a payments service is an example of a complex workflow:

- A payment type may be cash, check, or credit card, all of which have different processes.

- A credit card payment has many possible states, from successful to declined.

- The service may need to issue refunds or credits for a portion or the entire amount.

- A third-party service that processes credit cards may be unavailable due to an outage.

- Some payments may take multiple days to process.

Implementing this logic in a Lambda function can result in ‘spaghetti code’ that’s difficult to read, understand, and maintain. It can also become very fragile in production systems. The complexity is compounded if you must also implement error handling, retry logic, and input and output processing. These types of orchestration functions are an anti-pattern in Lambda-based applications.

Instead, use AWS Step Functions (or Azure Durable Functions) to orchestrate these workflows using a versionable, JSON-defined state machine. State machines can handle nested workflow logic, errors, and retries. A Standard workflow can also run for up to one year, and the service can maintain different versions of workflows, allowing you to upgrade production systems in place. Using this approach also results in significantly less custom code, making an application easier to test and maintain.
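For a feel of what such a JSON-defined state machine looks like, here is a small sketch of a payment workflow in Amazon States Language, built as a Python dictionary. The state names, the Lambda ARNs and the `$.paymentType` input field are hypothetical; the `Choice`, `Task`, `Retry` and `Catch` constructs are standard Amazon States Language. The helper only checks that every transition points at a defined state and is not a substitute for the service's own validation.

```python
import json

# Hypothetical function ARNs.
CHARGE_CARD_ARN = "arn:aws:lambda:eu-west-1:123456789012:function:charge-card"
RECORD_MANUAL_ARN = "arn:aws:lambda:eu-west-1:123456789012:function:record-manual-payment"

PAYMENT_WORKFLOW = {
    "Comment": "Sketch of a payment workflow",
    "StartAt": "ChoosePaymentType",
    "States": {
        "ChoosePaymentType": {
            "Type": "Choice",
            "Choices": [
                {
                    "Variable": "$.paymentType",
                    "StringEquals": "credit_card",
                    "Next": "ChargeCard",
                }
            ],
            "Default": "RecordManualPayment",
        },
        "ChargeCard": {
            "Type": "Task",
            "Resource": CHARGE_CARD_ARN,
            # Retry transient failures with exponential backoff.
            "Retry": [
                {
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 2,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }
            ],
            # Anything still failing after the retries ends in PaymentFailed.
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "PaymentFailed"}],
            "Next": "PaymentSucceeded",
        },
        "RecordManualPayment": {
            "Type": "Task",
            "Resource": RECORD_MANUAL_ARN,
            "Next": "PaymentSucceeded",
        },
        "PaymentSucceeded": {"Type": "Succeed"},
        "PaymentFailed": {
            "Type": "Fail",
            "Error": "PaymentFailed",
            "Cause": "Card could not be charged",
        },
    },
}


def undefined_targets(definition):
    """Return transition targets that do not name a defined state."""
    states = definition["States"]
    targets = {definition["StartAt"]}
    for state in states.values():
        for field in ("Next", "Default"):
            if field in state:
                targets.add(state[field])
        for rule in state.get("Choices", []) + state.get("Catch", []):
            targets.add(rule["Next"])
    return sorted(targets - set(states))


if __name__ == "__main__":
    print(json.dumps(PAYMENT_WORKFLOW, indent=2))
```

Notice that the retry and failure handling live in the definition, not in the Lambda code.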

Step Functions is designed for workflows within a bounded context or microservice. To coordinate state changes across multiple services, use Amazon EventBridge or Azure Event Grid instead. These are serverless event buses that route events based upon rules, and simplify orchestration between microservices.
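A minimal sketch of the EventBridge side, assuming a custom bus and event names I made up (`orders-bus`, `shop.orders`, `OrderStatusChanged`). The entry fields and the `put_events` call follow the boto3 EventBridge client; the client is passed in so the sketch runs without AWS credentials (in a real function it would be `boto3.client("events")`).

```python
import json


def build_order_event(order_id, status, bus_name="orders-bus"):
    """Build one PutEvents entry; source, detail type and bus are hypothetical."""
    return {
        "Source": "shop.orders",
        "DetailType": "OrderStatusChanged",
        "Detail": json.dumps({"orderId": order_id, "status": status}),
        "EventBusName": bus_name,
    }


def publish(events_client, entry):
    """Send one entry and report whether EventBridge accepted it."""
    response = events_client.put_events(Entries=[entry])
    return response.get("FailedEntryCount", 0) == 0


# Event pattern for a rule that only matches paid orders. A consumer
# service would attach its own target (for example a Lambda function).
ORDER_PAID_PATTERN = {
    "source": ["shop.orders"],
    "detail-type": ["OrderStatusChanged"],
    "detail": {"status": ["paid"]},
}
```

The producer only knows about the bus; which services react to `OrderStatusChanged` is decided by rules, not by the producer's code.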

Recursive patterns that cause invocation loops (WTF seriously)

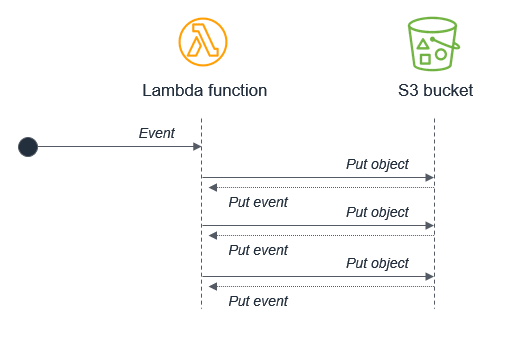

AWS services generate events that invoke Lambda functions, and Lambda functions can send messages to AWS services. Generally, the service or resource that invokes a Lambda function should be different to the service or resource that the function outputs to. Failure to manage this can result in infinite loops.

For example, a Lambda function writes an object to an S3 bucket, which in turn invokes the same Lambda function via a put event. The invocation causes a second object to be written to the bucket, which invokes the same Lambda function again, and so on.

While the potential for infinite loops exists in most programming languages, this anti-pattern has the potential to consume more resources in serverless applications. Both Lambda and S3 automatically scale based upon traffic, so the loop may cause Lambda to scale to consume all available concurrency and S3 will continue to write objects and generate more events for Lambda. In this event, you can press the “Throttle” button in the Lambda console to scale the function concurrency down to zero and break the recursion cycle.
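If you would rather script that emergency brake than hunt for the button, the sketch below sets the function's reserved concurrency to zero, which to my knowledge is what the console's “Throttle” action does. `put_function_concurrency` and `delete_function_concurrency` are boto3 Lambda client methods; the function name is hypothetical, and the client is injected so the sketch can be exercised without AWS access (normally `boto3.client("lambda")`).

```python
def throttle_function(lambda_client, function_name):
    """Set reserved concurrency to zero so no new invocations can start."""
    return lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=0,
    )


def unthrottle_function(lambda_client, function_name):
    """Remove the reserved concurrency setting once the loop is fixed."""
    return lambda_client.delete_function_concurrency(FunctionName=function_name)
```

Usage would be along the lines of `throttle_function(boto3.client("lambda"), "thumbnailer")`, followed by a fix and `unthrottle_function(...)`.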

However, there exist valid reasons why one might look to design a serverless application that way: for example a Lambda function that asynchronously transcodes video to various formats by using the Elastic Transcoder or MediaConvert SDKs. In such a case, your design should reasonably expect to hear back from the transcoding service about two things:

- whether the video transcoding job finished successfully and

- where the video sources are stored (blob storage and CDN URLs)

This example uses S3 but the risk of recursive loops also exists in SNS, SQS, DynamoDB, and other services. In most cases, it is safer to separate the resources that produce and consume events from Lambda.
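Where separate resources are not practical, a defensive guard inside the function can at least stop it from reacting to its own output. The sketch below is one way to do that, assuming the function writes under a `processed/` prefix (a convention I made up for the example). The record layout follows the S3 event notification format, where object keys arrive URL-encoded. It is a safety net, not a replacement for separating input and output resources.

```python
from urllib.parse import unquote_plus

# Hypothetical prefix that this function writes its results under.
OUTPUT_PREFIX = "processed/"


def keys_to_process(event, output_prefix=OUTPUT_PREFIX):
    """Return object keys from an S3 event, skipping the function's own output."""
    keys = []
    for record in event.get("Records", []):
        # S3 URL-encodes keys in notifications (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.startswith(output_prefix):
            continue  # written by this function: ignore to break the loop
        keys.append(key)
    return keys


def output_key(source_key, output_prefix=OUTPUT_PREFIX):
    """Derive the key that the result for `source_key` is written to."""
    return output_prefix + source_key
```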

Functions calling functions

Functions enable encapsulation and code re-use. Most programming languages support the concept of code synchronously calling functions within a code base. In this case, the caller waits until the function returns a response.

When this happens on a traditional server or virtual instance, the operating system scheduler switches to other available work. Whether the CPU runs at 0% or 100% does not affect the overall cost of the application, since you are paying for the fixed cost of owning and operating a server.

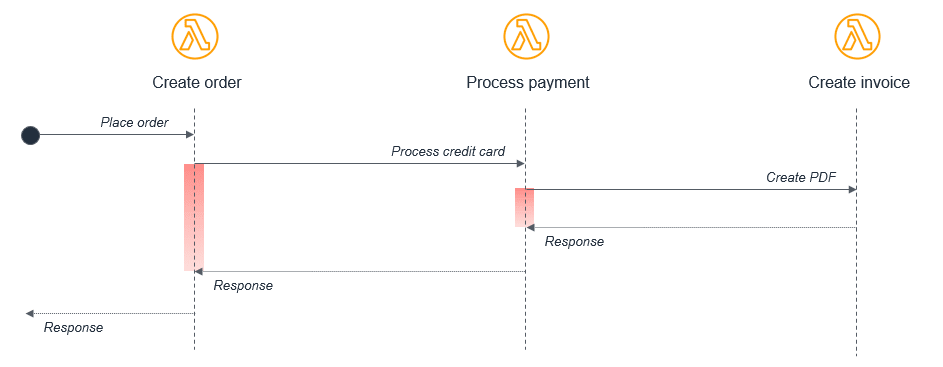

This model often does not adapt well to serverless development. For example, consider a simple ecommerce application consisting of three Lambda functions that process an order:

In this case, the Create order function calls the Process payment function, which in turn calls the Create invoice function. While this synchronous flow may work within a single application on a server, it introduces several avoidable problems in a distributed serverless architecture:

- Cost: with Lambda, you pay for the duration of an invocation. In this example, while the Create invoice function runs, two other functions are also running in a wait state, shown in red on the diagram.

- Error handling: in nested invocations, error handling can become much more complex. Either errors are thrown to parent functions to handle at the top-level function, or functions require custom handling. For example, an error in Create invoice might require the Process payment function to reverse the charge, or it may instead retry the Create invoice process.

- Tight coupling: processing a payment typically takes longer than creating an invoice. In this model, the availability of the entire workflow is limited by the slowest function.

- Scaling: the concurrency of all three functions must be equal. In a busy system, this uses more concurrency than would otherwise be needed.
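The cost point is easy to check with some back-of-the-envelope arithmetic. The sketch below uses made-up durations for each function's own work and a simplified model in which a caller is billed for its own work plus the full duration of everything it waits on, ignoring invocation overhead and memory size.

```python
def billed_ms_synchronous(own_work_ms):
    """Total billed duration for a nested call chain.

    `own_work_ms` is ordered from the outermost caller to the innermost
    callee; each function is billed for its own work plus everything
    it waits on downstream.
    """
    return sum(sum(own_work_ms[i:]) for i in range(len(own_work_ms)))


def billed_ms_decoupled(own_work_ms):
    """Total billed duration when each function only does its own work."""
    return sum(own_work_ms)


# Hypothetical durations: Create order, Process payment, Create invoice.
WORK_MS = [100, 800, 300]

if __name__ == "__main__":
    print(billed_ms_synchronous(WORK_MS))  # 1200 + 1100 + 300 = 2600
    print(billed_ms_decoupled(WORK_MS))    # 100 + 800 + 300 = 1200
```

With these numbers, more than half of the billed time in the synchronous chain is spent waiting.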

In serverless applications, there are two common approaches to avoid this pattern. First, use an SQS queue between Lambda functions (or Azure Service Bus). If a downstream process is slower than an upstream process, the queue durably persists messages and decouples the two functions. In this example, the Create order function publishes a message to an SQS queue, and the Process payment function consumes messages from the queue.
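Sketched in code, the queue-based version might look like the following. The queue URL and order fields are hypothetical; `send_message` is the boto3 SQS client call, and the consumer reads the `Records[].body` field that Lambda's SQS event source delivers. The SQS client is injected so the sketch runs without AWS access (normally `boto3.client("sqs")`).

```python
import json


def create_order(sqs_client, queue_url, order):
    """Create order: enqueue the order and return without waiting for payment."""
    sqs_client.send_message(QueueUrl=queue_url, MessageBody=json.dumps(order))
    return {"statusCode": 202, "body": json.dumps({"orderId": order["id"]})}


def process_payment_handler(event, context):
    """Process payment: invoked by Lambda with a batch of SQS messages."""
    processed = []
    for record in event["Records"]:
        order = json.loads(record["body"])
        # Real payment logic would go here.
        processed.append(order["id"])
    return processed
```

Create order finishes as soon as the message is accepted by the queue, so it is no longer billed while the payment runs.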

The second approach is to use AWS Step Functions (or Azure Durable Functions). For complex processes with multiple types of failure and retry logic, Step Functions can help reduce the amount of custom code needed to orchestrate the workflow. As a result, Step Functions orchestrates the work and robustly handles errors and retries, and the Lambda functions contain only business logic.

Synchronous waiting within a function's execution context

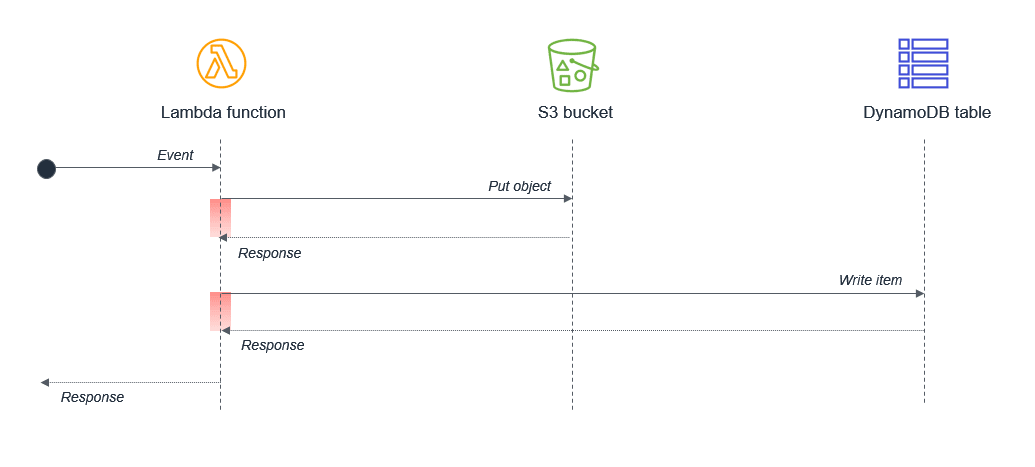

Within a single Lambda function, ensure that any potentially concurrent activities are not scheduled sequentially. For example, a Lambda function might write to an S3 bucket and then write to a DynamoDB table:

The wait states, shown in red in the diagram, are compounded because the activities are sequential. If the tasks are independent, they can be run in parallel, which results in the total wait time being set by the longest-running task.
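A minimal sketch of the parallel version, using a thread pool from the standard library. The two `write_to_*` functions are stand-ins that just sleep to simulate I/O latency (the 0.2 s and 0.3 s figures are invented); in a real function they would wrap the S3 and DynamoDB calls.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def write_to_s3(payload):
    """Stand-in for an S3 write; sleeps to simulate network latency."""
    time.sleep(0.2)
    return "s3:" + payload


def write_to_dynamodb(payload):
    """Stand-in for a DynamoDB write; sleeps to simulate network latency."""
    time.sleep(0.3)
    return "dynamodb:" + payload


def handler(event, context):
    """Run both independent writes at the same time and wait for both."""
    payload = event["payload"]
    with ThreadPoolExecutor(max_workers=2) as pool:
        s3_future = pool.submit(write_to_s3, payload)
        dynamodb_future = pool.submit(write_to_dynamodb, payload)
        # .result() re-raises any exception from the worker thread.
        return [s3_future.result(), dynamodb_future.result()]
```

Run back to back, the two stand-ins would take roughly 0.5 s; in parallel the handler takes about as long as the slower of the two.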

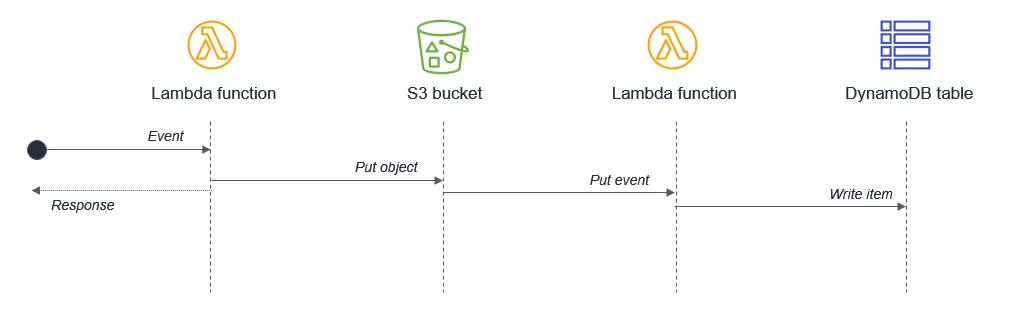

In cases where the second task depends on the completion of the first task, you may be able to reduce the total waiting time and the cost of execution by splitting the Lambda functions:

In this design, the first Lambda function responds immediately after putting the object to the S3 bucket. The S3 service invokes the second Lambda function, which then writes data to the DynamoDB table. This approach minimizes the total wait time in the Lambda function executions.
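Here is a rough sketch of the two functions in that design. The bucket, table and attribute names are hypothetical; `put_object` and `put_item` are the boto3 S3 and DynamoDB client calls, and the second function reads the S3 event notification format. Clients are passed in so the sketch can run without AWS access.

```python
from urllib.parse import unquote_plus


def store_object(s3_client, bucket, key, body):
    """First function: write to S3 and return straight away."""
    s3_client.put_object(Bucket=bucket, Key=key, Body=body)
    return {"statusCode": 200, "body": key}


def index_objects(dynamodb_client, table_name, event):
    """Second function: invoked by the S3 put event, writes to DynamoDB."""
    written = []
    for record in event["Records"]:
        s3_object = record["s3"]["object"]
        key = unquote_plus(s3_object["key"])  # keys arrive URL-encoded
        dynamodb_client.put_item(
            TableName=table_name,
            Item={
                "objectKey": {"S": key},
                "sizeBytes": {"N": str(s3_object.get("size", 0))},
            },
        )
        written.append(key)
    return written
```

Note that the second function writes to DynamoDB, not back to the bucket that triggered it, so this split does not reintroduce the recursive pattern described earlier.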

Finale

By this point, using the 3 blog posts in this event-driven architecture series, one should feel comfortable enough to dip their toes in designing a brand new serverless application or to migrate existing apps to serverless.

Next, it's time to dive into (read: code) a reusable framework for creating serverless applications in .NET! I will create a .NET Standard library that treats AWS Lambda function triggers as an abstraction. You can add it as a reference to a brand new Lambda function project to address cross-cutting concerns such as logging, tracing and monitoring. In fact, the entry point for Lambda functions using this new library of ours will look exactly like an ASP.NET Core project's Startup class!

This will reduce the boilerplate code necessary for creating new AWS Lambda functions in .NET considerably, improving team velocity and allowing your developers to focus on the thing that matters most: business logic.

References