Scale web and mobile video transcoding using serverless

Intro

Video is the undisputed content king.

As a media format, it has been capturing and retaining ever bigger audiences for the past several years; look no further than the success the likes of TikTok, YouTube and Twitch have enjoyed. YoY video marketing growth (which is a great indicator for video as an outreach medium) has averaged around 12.5% for the past 6 years (99firms, 2021). Video is projected to account for 82% of all consumer internet traffic next year!

There are a great many use cases for SaaS startups to let users record video, be it on mobile or on the web. However, there are numerous challenges with both the UX of such product features and their technical aspects.

Today we'll discuss some common challenges with handling video recording, transcoding and viewing on the web, as well as lessons learned in the trenches about dealing with cost as a system design constraint.

Problem Statement

Users expect video recordings to be snappy; nobody wants to wait staring at a loader for more than a couple of seconds tops. In fact, studies suggest that this directly contributes to subscription churn which is the worst possible SaaS business outcome (Portent, 2019).

Serving video in various device/format combinations though is an expensive proposition; both figuratively as well as literally. The original video file will need to undergo a computing-intensive process called transcoding which will produce a different video file that is optimized, based on a set of parameters, for viewing in specific devices. Think .webm files for viewing in Chrome, .mp4 files for viewing in Safari and also the long list of mobile devices that have hundreds of different screen diagonal dimensions which you'll need to account for. You don't need hundreds of different formats, but you will need quite a few if you're going to serve high quality, device-optimized video content to your users.

Which gets us to the literally expensive part: costs will run rampant if your system design is naïve. Managed services like Amazon Elastic Transcoder will run to the tune of tens of thousands of dollars per month for a few thousand video transcoding jobs per day. And should you attempt to transcode the videos yourself using an executable like FFMPEG running on EC2 instances, you are either a video veteran or someone who doesn't quite understand the inherent complexity of optimizing media content for consumption on commercial devices just yet: producing transcoded output for different kinds of devices often involves trial and error to find the right transcoding settings that play properly and look good to the end user. This trial and error process wastes compute resources.

Finally, traditional encoding solutions don't scale up and down with your business needs. Instead, you need to guess how much capacity to provision ahead of time, which inevitably means either wasted money (if you provision too much and leave capacity underutilized) or delays to your business (if you provision too little and need to wait to run your encoding jobs).

The kind of system design we ended up with only makes sense if you have to treat a tight R&D budget as a system design constraint (and it almost always is one). Unless you're Netflix, in which case you just do Netflix things instead: Netflix Innovator (amazon.com).

Definitions

FFMPEG

ffmpeg is a very fast video and audio converter that can also grab from a live audio/video source. It can also convert between arbitrary sample rates and resize video on the fly with a high-quality polyphase filter. ffmpeg reads from an arbitrary number of input "files" (which can be regular files, pipes, network streams, grabbing devices, etc.), specified by the -i option, and writes to an arbitrary number of output "files", which are specified by a plain output url. Anything found on the command line which cannot be interpreted as an option is considered to be an output url. Each input or output url can, in principle, contain any number of streams of different types (video/audio/subtitle/attachment/data).
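To make the input/output convention concrete, here is a minimal sketch that assembles an ffmpeg command line in Python. The function name, file names and the choice of H.264/AAC at 720p are illustrative assumptions, not settings taken from the system described in this post, and the codec flags assume an ffmpeg build that includes libx264.

```python
from typing import List, Sequence


def build_ffmpeg_args(inputs: Sequence[str], output: str, height: int = 720) -> List[str]:
    """Build an ffmpeg argv: every input is introduced by -i, the output is a bare URL."""
    args = ["ffmpeg", "-y"]  # -y overwrites an existing output file without prompting
    for source in inputs:
        args += ["-i", source]
    args += [
        "-vf", f"scale=-2:{height}",  # resize to the target height, keep the aspect ratio
        "-c:v", "libx264",            # H.264 video (assumes ffmpeg was built with libx264)
        "-c:a", "aac",                # AAC audio
        output,                       # anything that is not an option is an output URL
    ]
    return args


if __name__ == "__main__":
    # Hypothetical file names, printed rather than executed.
    print(" ".join(build_ffmpeg_args(["recording.webm"], "recording-720p.mp4")))
```

Passing the resulting list to `subprocess.run(args, check=True)` would execute it, provided an ffmpeg binary is on the PATH.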

AWS Elastic Transcoder

Amazon Elastic Transcoder runs your transcoding jobs using the Amazon Elastic Compute Cloud (Amazon EC2). EC2’s scale means completing large transcoding jobs quickly and reliably. Amazon Elastic Transcoder is built to work with content stored in Amazon Simple Storage Service (Amazon S3), so it's durable and cost-effective storage for media libraries irrespective of the size thereof. You can even get notified about the status of your transcoding jobs via Amazon Simple Notification Service (Amazon SNS).

Amazon Elastic Transcoder features an AWS Management Console, service API and SDKs so you can integrate transcoding into your own applications and services.

To use Amazon Elastic Transcoder, you:

- Create a transcoding pipeline that specifies the input Amazon S3 bucket, the output Amazon S3 bucket and storage class, and an AWS Identity and Access Management (IAM) role that is used by the service to access your files.

- Create a transcoding job by specifying the input file, output files, and transcoding presets to use (you can choose from a set of pre-defined transcoding presets – for example 720p - or create your own custom transcoding preset). Optionally, you can specify thumbnails and job specific transcoding parameters like frame rate and resolution.

While you have transcoding jobs running on Amazon Elastic Transcoder, you can:

- Automatically receive status of your transcoding jobs via notifications.

- Query the status of transcoding jobs.

- Manage your transcoding jobs by stopping, starting or canceling them.

WebRTC

WebRTC adds real-time communication capabilities to your application that works on top of an open standard. It supports video, voice, and generic data to be sent between peers, allowing developers to build powerful voice- and video-communication solutions. The technology is available on all modern browsers as well as on native clients for all major platforms. The technologies behind WebRTC are implemented as an open web standard and available as regular JavaScript APIs in all major browsers. For native clients, like Android and iOS applications, a library is available that provides the same functionality. The WebRTC project is open-source and supported by Apple, Google, Microsoft and Mozilla, amongst others.

Background

Back in late 2016, I started working at a startup where I inherited a system for users of a web app to record video on their browsers, using the, then still experimental, WebRTC protocol. The file of the original user video would then be posted to an API that would do a couple of things:

- store this file in Amazon S3 blob storage and

- enqueue an Amazon Elastic Transcoder job for each of the pipelines defined.

Elastic Transcoder would then add those job outputs to that same S3 location as additional objects with a suitable naming convention so that our UI clients (web and mobile) know which ones to look for.

Here's the rub:

- For this system, video transcoding was happening using AWS Elastic Transcoder to produce various media outputs (AWS Elemental MediaConvert wasn't a thing back then).

- But our EC2-hosted web servers would also play a critical part: we had designed computing tasks for product features, such as taking any number of thumbnails from a video, overlaying corporate logos and text over those etc.; product features that couldn't be satisfied by Elastic Transcoder due to the service's usage restrictions. So, we'd use FFMPEG for those, running on those machines which also served traditional web server workloads and thus when a video transcoding job would come in, FFMPEG would monopolize the CPU of the EC2 instance.

- Video transcoding is a CPU-bound process, so these EC2 instances would grind to a halt, with CPU usage reaching and remaining around 100%.

- Users had to synchronously wait for their video to be processed and because of the previous points the whole experience was slow for longer, high quality videos.

To address some of these UX shortcomings, we introduced artificial quotas like restricting videos to 5 minutes in length or capping the quality at 720p, both of which made no sense business-wise. If anything, these system constraints, illogical from the user's PoV, were hurting our product.

A year and a few churned subscriptions later, we had the leverage to budget development time to design a new system, one that would address the challenges of the original and build on the domain knowledge we had gained since.

Architecture Decision Record

There are a couple of managed services in this solution space on AWS:

- Amazon Elastic Transcoder

- AWS Elemental MediaConvert

As well as many ways to host a system of our own design, such as:

- EC2 instances (Virtual Machines),

- Any one of the 37 different ways of running containerized workloads on AWS or

- Lambda (serverless functions).

Our primary goals were to:

- remove any computing tasks related to video features from our web servers,

- increase the system's elasticity by design; scaling computing resources up and down based on system usage and

- drive costs down compared to the first iteration of the system.

At the same time, we had limited resources and time to dedicate to this project so we were keen on keeping changes to a minimum while re-designing the system to meet the goals outlined above.

We knew we had to leverage Lambda somehow, to achieve goals #2 & #3 and so we had to come up with a way to refactor all the existing computing tasks from EC2 to Lambda to achieve #1.

Design

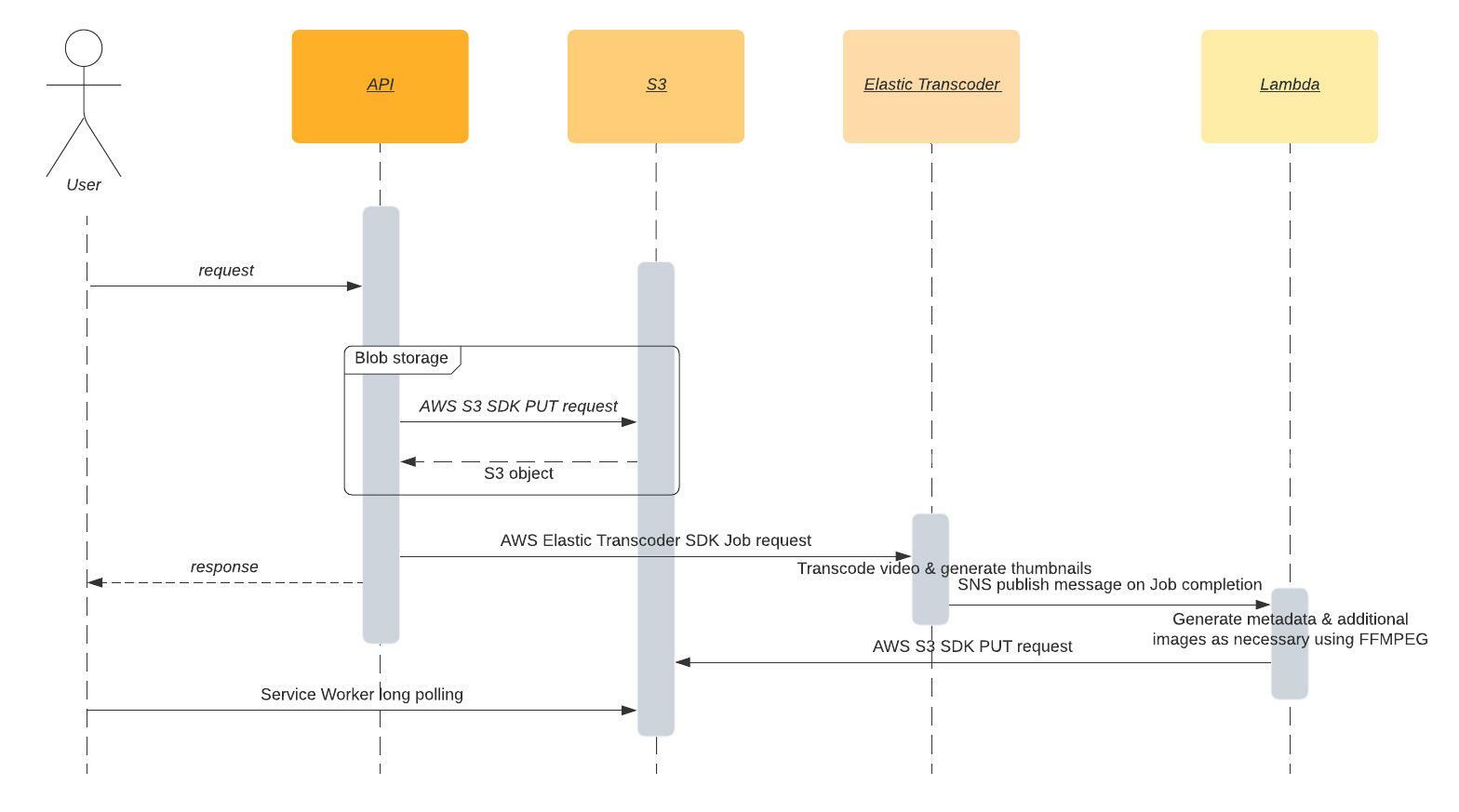

This is the system we ended up with:

It should be noted that while it would be technically feasible to refactor the transcoding workload from Elastic Transcoder to Lambda, there are system limitations in Lambda (chief among those the 15-minute maximum duration of a single function invocation) that prevented us from going that route with our design.

If we were to make the same decisions we made in 2017-18 a couple of years later, we might have chosen AWS Elemental MediaConvert instead. The service offers a great range of contemporary transcoding templates out-of-the-box, such as Apple HLS or .webm with the VP9 codec, and yet has a fairer on-demand pricing model compared to Elastic Transcoder, since the minimum billing duration is 10 seconds versus 1 minute for the latter. Effectively, this means that for transcoding a 9-second-long video, you'll be charged for 10 seconds of computing by MediaConvert versus 60 seconds of computing by Elastic Transcoder.
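The billing difference is easy to reproduce with a few lines of arithmetic. This is a sketch under the assumption that each service rounds a job's duration up to a whole billing unit (10 seconds and 60 seconds respectively, as described above); the function name is made up for illustration and the actual pricing pages remain the authority.

```python
import math


def billed_seconds(duration_seconds: float, increment_seconds: int) -> int:
    """Round a duration up to the next multiple of the billing increment."""
    if duration_seconds <= 0:
        return 0
    return math.ceil(duration_seconds / increment_seconds) * increment_seconds


if __name__ == "__main__":
    clip = 9  # seconds, the example used in the text
    print("10-second units:", billed_seconds(clip, 10))
    print("60-second units:", billed_seconds(clip, 60))
```

For short clips, which is what users of a recording feature tend to produce, the coarser unit dominates the bill: under this assumption every clip shorter than a minute is billed as a full minute.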

Implementation

UI Clients

We've had both web (Angular) as well as mobile (Xamarin) UI clients for our product, but I imagine the following applies irrespective of the tech stack:

- The client performs a POST request to the relevant API Gateway endpoint with the original video file.

- The client then utilizes a polling technique, in this case HTTP requests that time out and a Circuit Breaker pattern, to keep polling the Amazon S3 bucket where the transcoded videos will eventually reside. So long as the client keeps getting a 404, the videos aren't there. Obviously😉

I should also mention that it's possible to skip hitting an API of your design and implementation altogether, by directly using the AWS SDK for S3 in your client code and streaming the data over to an S3 bucket. There exist product or technical reasons why this might or might not be the optimal thing to do, depending on your product and use case, but this is a discussion for another day.
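The polling step can be sketched as follows. The real clients were Angular and Xamarin, so this Python version only illustrates the logic; the function names, thresholds and delays are assumptions. A 404 is treated as "not ready yet", while timeouts and unexpected statuses count toward a simple circuit breaker that gives up after several consecutive failures.

```python
import time
import urllib.error
import urllib.request
from typing import Callable, Optional


def head_status(url: str, timeout: float = 3.0) -> int:
    """Return the HTTP status of a HEAD request; HTTP errors such as 404 are returned, not raised."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


def poll_until_ready(
    url: str,
    fetch_status: Callable[[str], int] = head_status,
    max_attempts: int = 30,
    failure_threshold: int = 3,
    delay_seconds: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until the object exists; repeated failures other than 404 open the circuit."""
    consecutive_failures = 0
    for _ in range(max_attempts):
        status: Optional[int]
        try:
            status = fetch_status(url)
        except OSError:  # timeouts and connection errors (URLError subclasses OSError)
            status = None
        if status == 200:
            return True
        if status == 404:
            consecutive_failures = 0  # expected while transcoding is still in progress
        else:
            consecutive_failures += 1
            if consecutive_failures >= failure_threshold:
                raise RuntimeError("circuit open: too many consecutive failures")
        sleep(delay_seconds)
    return False
```

The `fetch_status` and `sleep` parameters are injectable so the loop can be exercised without a network; a production client would likely add backoff between attempts as well.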

API Gateway

In this design, the API gateway does a couple of things:

- It persists the original video file in S3 blob storage and

- It uses the response of the AWS SDK for S3, which contains the video's object URL in S3, to enqueue multiple Elastic Transcoder jobs, one for each pipeline (each pipeline template represents a different transcoding format, optimized for a specific device/audience).
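The second step above could look roughly like the sketch below, which builds one `create_job` request per pipeline and submits it through a boto3 Elastic Transcoder client. The pipeline IDs, preset IDs and output naming convention are hypothetical placeholders, not the values used in the system described here.

```python
from typing import Any, Dict, List

# Hypothetical pipeline/preset IDs and suffixes; real values come from your AWS account.
PIPELINES = [
    {"pipeline_id": "pipeline-mp4-placeholder", "preset_id": "preset-mp4-placeholder", "suffix": "720p.mp4"},
    {"pipeline_id": "pipeline-webm-placeholder", "preset_id": "preset-webm-placeholder", "suffix": "720p.webm"},
]


def build_job_requests(input_key: str) -> List[Dict[str, Any]]:
    """Build one create_job request per pipeline, naming outputs after the original object."""
    stem = input_key.rsplit(".", 1)[0]  # drop the file extension of the original upload
    return [
        {
            "PipelineId": p["pipeline_id"],
            "Input": {"Key": input_key},
            "Outputs": [{"Key": f"{stem}-{p['suffix']}", "PresetId": p["preset_id"]}],
        }
        for p in PIPELINES
    ]


def enqueue_jobs(transcoder: Any, input_key: str) -> List[str]:
    """Submit the jobs; `transcoder` is expected to be boto3.client("elastictranscoder")."""
    job_ids = []
    for request in build_job_requests(input_key):
        response = transcoder.create_job(**request)
        job_ids.append(response["Job"]["Id"])
    return job_ids
```

Keeping the request construction separate from the submission makes the naming convention testable without AWS credentials, and the UI clients only need to know the same suffixes to find the outputs.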

One might wonder why we did not skip the second part entirely by utilizing an S3 PUT event on the relevant bucket but unfortunately, Elastic Transcoder is not a valid destination for an S3 event notification; only SNS, SQS, Lambda and EventBridge are.

S3

The S3 bucket containing the static media files of this system is fronted by an Amazon CloudFront CDN distribution for faster delivery to end users, serving content from edge locations closer to the user's physical location.

Elastic Transcoder

Our usage of Elastic Transcoder did not change over the course of this re-design process but we added one significant element to facilitate the communication between Elastic Transcoder and Lambda: once an Elastic Transcoder Job ran to completion successfully, it would publish a notification to the SNS topic that the Lambda function is subscribed to.
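On the receiving side, a Lambda handler subscribed to that topic might unpack the notification as sketched below. SNS delivers the payload as a JSON string under `Records[].Sns.Message`; the field names read from the message (`state`, `outputKeyPrefix`, `outputs[].key`) follow the Elastic Transcoder notification format as I understand it, so treat them as assumptions to verify against the service documentation.

```python
import json
from typing import Any, Dict, List


def completed_output_keys(event: Dict[str, Any]) -> List[str]:
    """Collect the S3 keys of outputs from COMPLETED Elastic Transcoder jobs in an SNS event."""
    keys: List[str] = []
    for record in event.get("Records", []):
        message = json.loads(record["Sns"]["Message"])  # the payload arrives as a JSON string
        if message.get("state") != "COMPLETED":
            continue  # skip PROGRESSING, WARNING and ERROR notifications
        prefix = message.get("outputKeyPrefix") or ""
        for output in message.get("outputs", []):
            keys.append(prefix + output["key"])
    return keys


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Lambda entry point; the actual post-processing is out of scope for this sketch."""
    keys = completed_output_keys(event)
    return {"processed": keys}
```

Filtering on the job state inside the function is a defensive choice: it keeps the handler correct even if the pipeline is later configured to publish progress or error notifications to the same topic.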

Lambda

Our Lambda function would generate thumbnails and overlay user-generated information on videos (among other features), then persist the media on the same S3 object location where the UI clients are looking for those.
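As an illustration of what such a function might run, the sketch below builds two ffmpeg invocations: one that grabs a single frame as a thumbnail and one that overlays a logo on the video. The function names, timestamps and coordinates are assumptions, and it presumes an ffmpeg binary has been packaged with the function.

```python
import subprocess
from typing import List


def thumbnail_args(video: str, image: str, at_seconds: float = 1.0) -> List[str]:
    """ffmpeg argv that seeks to a timestamp and writes a single frame as an image."""
    return ["ffmpeg", "-y", "-ss", str(at_seconds), "-i", video, "-frames:v", "1", image]


def overlay_args(video: str, logo: str, output: str, x: int = 10, y: int = 10) -> List[str]:
    """ffmpeg argv that draws a logo over the video at (x, y) and copies the audio stream."""
    return [
        "ffmpeg", "-y", "-i", video, "-i", logo,
        "-filter_complex", f"overlay={x}:{y}",  # second input is drawn on top of the first
        "-c:a", "copy",                         # audio is passed through untouched
        output,
    ]


def run(args: List[str]) -> None:
    """Execute ffmpeg and raise CalledProcessError if it exits with a non-zero status."""
    subprocess.run(args, check=True)
```

Inside Lambda, the input and output paths would typically live under `/tmp`, the function's writable scratch directory, with the files downloaded from and uploaded back to S3 around the `run` call.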

There exist some rather significant technical challenges with running FFMPEG on AWS Lambda functions (those are probably mitigated somewhat when running AWS Lambda containers, but I haven't actually tried this). These challenges are not specific to the FFMPEG binary, but rather related to the Amazon Linux 2 AMI for Lambda, which has to make certain compromises, i.e. it lacks many of the libs commonly found in other popular Linux distros. I have compiled a list of the things one needs to do to accomplish that in the second half of this post.

Conclusion

This post is, yet again, all about serverless, event-driven architectures; S3, SNS and Elastic Transcoder events in this system's case.

It's probably more convenient and most likely better from a time-to-market perspective (but not nearly as cost-effective) to use a service like AWS Elemental MediaConvert when it comes to transcoding and delivering video to your end users nowadays. Still, I thought this serverless video transcoding system design might be interesting to blog about as an alternative and a reference point for readers who might be looking to integrate scalable and cost-optimized video features in their products.

What's next

My final entry in this event-driven, serverless architectures blog series (which has now spanned the entirety of my 2021 blogging activity) will be about designing SQL-based stores on AWS when your system's primary source of truth is NoSQL, aka how to stream data from a store like DynamoDB to a SQL database like PostgreSQL hosted in Amazon RDS or Amazon Aurora in near real-time with eventual consistency.

There are a few possible designs and more than a few reasons why a SaaS startup might be interested in creating such a reporting engine (or even forming a Data Lake) in AWS so I'll be going over those in some detail.

References